Pharo presentation at Smalltalks 2014, Cordoba, Argentina

Towards Live Programming in ROS with PhaROS and LRP (DSL Rob 2014)

In traditional robot behavior programming, the edit-compile-simulate-deploy-run cycle creates a large mental disconnect between program creation and eventual robot behavior. This significantly slows down behavior development because there is no immediate mental connection between the program and the resulting behavior. With live programming the development cycle is made extremely tight, realizing such an immediate connection. In our work on programming of ROS robots in a more dynamic fashion through PhaROS, we have experimented with the use of the Live Robot Programming language. This has given rise to a number of requirements for such live programming of robots. In this text we introduce these requirements and illustrate them using an example robot behavior.

This presentation has been given at the DSL Rob Workshop held as part of the SIMPAR 2014 conference (Bergamo, Italy).

Controlling robots with PhaROS

An example of a robotic guide at Ecole des Mines de Douai.

Performance Benchmarking for Multi-Robot Exploration

Towards Live Programming in ROS with PhaROS and LRP

In this tutorial we will show you how to programs robot behaviour on using Live Robot Programming over PhaROS.

Setup

- Follow the steps 1 to 4 of this post.

-

Create a ROS node that consumes

/kompai2/poseand/kompai/scanand publish in/command_velocity. To do this, just executing this:LrpharosPackage uniqueInstance -

Create an instance of the

RobulabBridgeclassRobulabBridge uniqueInstance - To assure that everything is fine, inspect the instance of

RobulabBridgeand check that its instance variablelaserDatais not nil and its values change over the time. - Open the LRP UI by right-clicking the World and selecting Live Robot Programming.

Stop when an obstacle is detected

-

Ok, so now we can start writing the behavior. First we will need some variables, those are:

robulabto manage the robot and some constants such as:f_velas linear velocity,t_velfor angular velocity, andmin_distanceas the minimum distance between the robot and an obstacle.(var robulab := [RobulabBridgr uniqueInstance ]) (var min_distance := [0.5]) (var f_vel := [0.25]) (var t_vel := [0.5]) -

We define the state machine called Tito.

What we want to the robot is to go forward unless there is an obstacle in front of it so it should stop and turn to avoid it.

This could be modelled in a abstractly as two states:forwardandavoid.(machine Tito ;; States (state forward (onentry [robulab value forward: f_vel value]) ) (state stop (onentry [robulab value stop]) ) ;; Transitions (on obstacle forward -> stop t-avoid) (on noObstacle avoid -> forward t-forward) ;; Events (event obstacle [robulab value isThereAnObstacle: min_distance value]) (event noObstacle [(robulab value isThereAnObstacle: min_distance value) not]) ) -

Finally, to run it, just start the machine on the

forwardstate.

(spawn Tito forward) - The robot should move linearly and stop when detects an obstacle.

Avoiding obstacles

Let’s add an avoiding behavior. A simple one might be turning until it detects there is no obstacle and go forward again.

Then a simple behavior that match the avoidance requisite is:

- If the obstacle is in the left side of the front: turn right

- If the obstacle is in the right side of the front: turn left.

RobotBridge provides two methods to detect obstacles on the left and right part of the front of the robot: RobotBridge>>isThereARightObstacle: and RobotBridge>>isThereALeftObstacle:

Then, the idea is to turn left if there is an obstacle in the front-right, or, turn right if there is an obstacle in the front-left.

-

Add the following states

(state turnLeft (onentry [robulab value turn: t_vel value]) ) (state turnRight (onentry [robulab value turn: t_vel value negated]) ) -

Add the corresponding transitions

(on rightObstacle stop -> turnLeft t-lturn) (on leftObstacle stop -> turnRight t-rturn) (on noObstacle turnLeft -> stop t-tlstop) (on noObstacle turnRight -> stop t-trstop) -

And add the events

(event rightObstacle [robulab value isThereARightObstacle: minDistance value]) (event leftObstacle [robulab value isThereALeftObstacle: minDistance value]) - Now the robot will start turning to avoid the obstacle.

Note

Updated version of LRP it is not necessary to add value after a variable.

Then,

(onentry [robulab value turn: t_vel value negated])

is turned to

(onentry [robulab turn: t_vel negated])

making it more readable.

Getting current pose of robot using tf listener

Our coordination framework for multi-robot exploration needs to know the current robot’s pose (position and orientation) within the explored map frame.

There are two ways to achieve it:

1 – Using costmap function.

bool costmap_2d::Costmap2DROS::getRobotPose(tf::Stamped& global_pose) const

2 – Using tf listener.

geometry_msgs::PoseStamped pose_stamped;

pose_stamped.header.stamp = ros::Time::now();

pose_stamped.header.frame_id = tf_prefix + "/" + map_frame;

pose_stamped.pose.position.x = transform.getOrigin().getX();

pose_stamped.pose.position.y = transform.getOrigin().getY();

pose_stamped.pose.position.z = transform.getOrigin().getZ();

pose_stamped.pose.orientation.x = transform.getRotation().getX();

pose_stamped.pose.orientation.y = transform.getRotation().getY();

pose_stamped.pose.orientation.z = transform.getRotation().getZ();

pose_stamped.pose.orientation.w = transform.getRotation().getW();

pose_publisher.publish(pose_stamped);

A complete implementation of the second method can be found http://wiki.ros.org/pose_publisher.

Both methods need a transform from “map” to “odom” (gmapping can do this).

Our coordination framework will be released after the corresponding paper has been published.

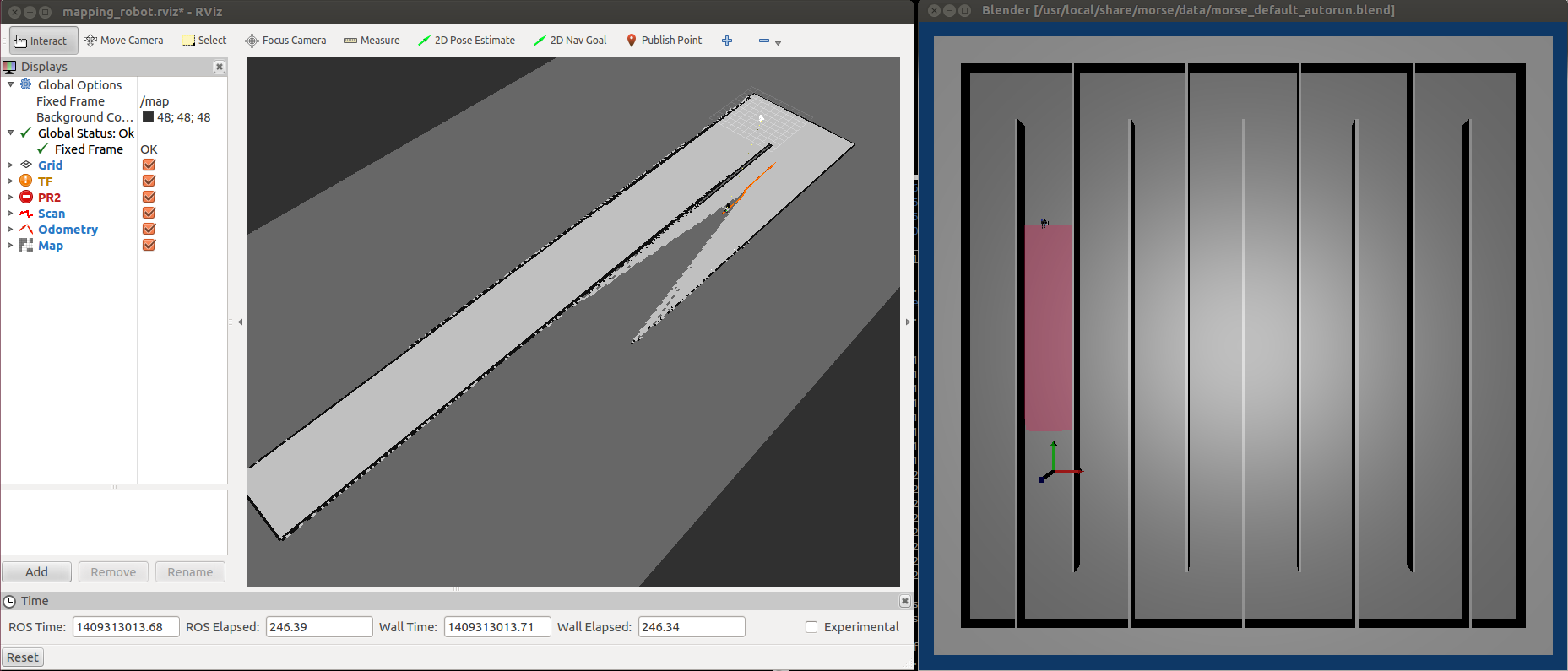

Manually building an environment map in MORSE

To check the accuracy of the exploration map, we need to compare with a pre-built one.

Of course, the latter needs to have a good accuracy.

We provide here a tool to manually build an environment map in MORSE.

This tool has the following features:

- Map building using gmapping ROS package.

- Robot with perfect odometry.

- Visualize the mapping process using rviz ROS package.

Robulab’s first lesson of LRP through PhaROS

The objective of this tutorial is to be able to create a behaviour for the Robulab described using Live Robot Programming. The LRP program transparently uses PhaROS to communicate with the Robulab.

Let’s do it step by step

- Follow the instructions to have Robulab working specified in this tutorial.

-

Open the image you created on the previous step and download the LRP code1

Gofer it smalltalkhubUser: 'jfabry' project: 'LiveRobotProgramming'; configuration; loadDevelopment -

Download the code with the example by executing the following snippet on a workspace:

Gofer new smalltalkhubUser: 'mcamp' project: 'RobotExperiments'; package: 'LrpharosPackage'; load. -

Let’s check everything is ok before launching LRP UI:

- The laptop is connected to UBNT network

roscoreis running.- You have cleaned processes by executing

ProcessesCleaner clean. - You started the driver node for kompai.

-

Our example needs a PhaROS node subscribed to

/kompai2/poseand another node publishing on/command_velocity, to do so you need to create a instance ofLrpharosPackage. Due to the live feature of LRP, it needs to have an unique instance of the package (which contains the nodes).LrpharosPackage uniqueInstance -

Open the LRP UI by right-clicking the World and selecting ** Live Robot Programming **. It will open a window like this:

-

Now, copy&paste the following script into the left pane (You can find it also in

LrpharosPackage class>>lrpSimple)(var robulab := [LrpharosPackage uniqueInstance]) (var stop := [0]) (machine simple (state forward (onentry [robulab value forward: 0.1]) ) (state stop (onentry [robulab value stop]) ) (state finish (onentry [robulab value stop]) ) (on forceStop *-> finish t-finish) (event forceStop [stop value = 1]) (ontime 2000 forward -> stop t-f) (ontime 1000 stop -> forward t-s) )It should look something like this

-

Now we are almost close to launch the script. Before that you should be aware to have ways to stop it in an emergency case: have a remote joystick or just switch it off.

-

To trigger it add the following line at the end of the script:

(spawn simple forward)Et voilà! The robot will start moving forward and then stop as the two steps.

-

An alternatively way to stop the robot using the LRP UI is by setting the

stopvariable to 1 in the ** Variables: ** pane. -

After stopping the robot, if you want to re-start it you have to click Reset Int. button in the bottom of the left pane.

Any question? Feel free to ask below.

NOTES

-

LRP uses Roassal visualization engine for displaying the machines, states and transitions. After LRP is installed, you should run do a few simple steps in order to avoid a small-but-hard-to-solve bug related to fonts. You can fix it in less than 1 minute following the instructions here.

-

Each time you need to clean the proccesses through

ProcessesCleaner clean, the LRP process is terminated. Then you have to close the window after doing it. -

Everytime you create a kompai node (through

PureROS new scriptKompai1orscriptKompai2) you should then reset the LRP singleton by executing:LrpharosPackage reset.This way the LrpharosPackage instance will be bound to the correct kompai node.

Talking to Robots with Pharo

Slides of my presentation given at ESUG 2014 conference are available online (see below). It’s about Robot software development using the Pharo dynamic language. It includes a quick overview of PhaROS our bridge to the ROS, as well as BoTest our framework for TDD for robotics applications. The video is also available on Youtube (see below) thanks to ESUG student volunteers. Note it is in two parts.